Why an Intelligent Documentation Chatbot?

Users of complex web applications expect answers without leaving the page they are working on. Traditional FAQs and long documentation pages force them to search, skim, and guess at the right keywords. An intelligent documentation chatbot addresses this: it integrates directly into your web application and provides real-time, contextual assistance by drawing on your existing knowledge base.

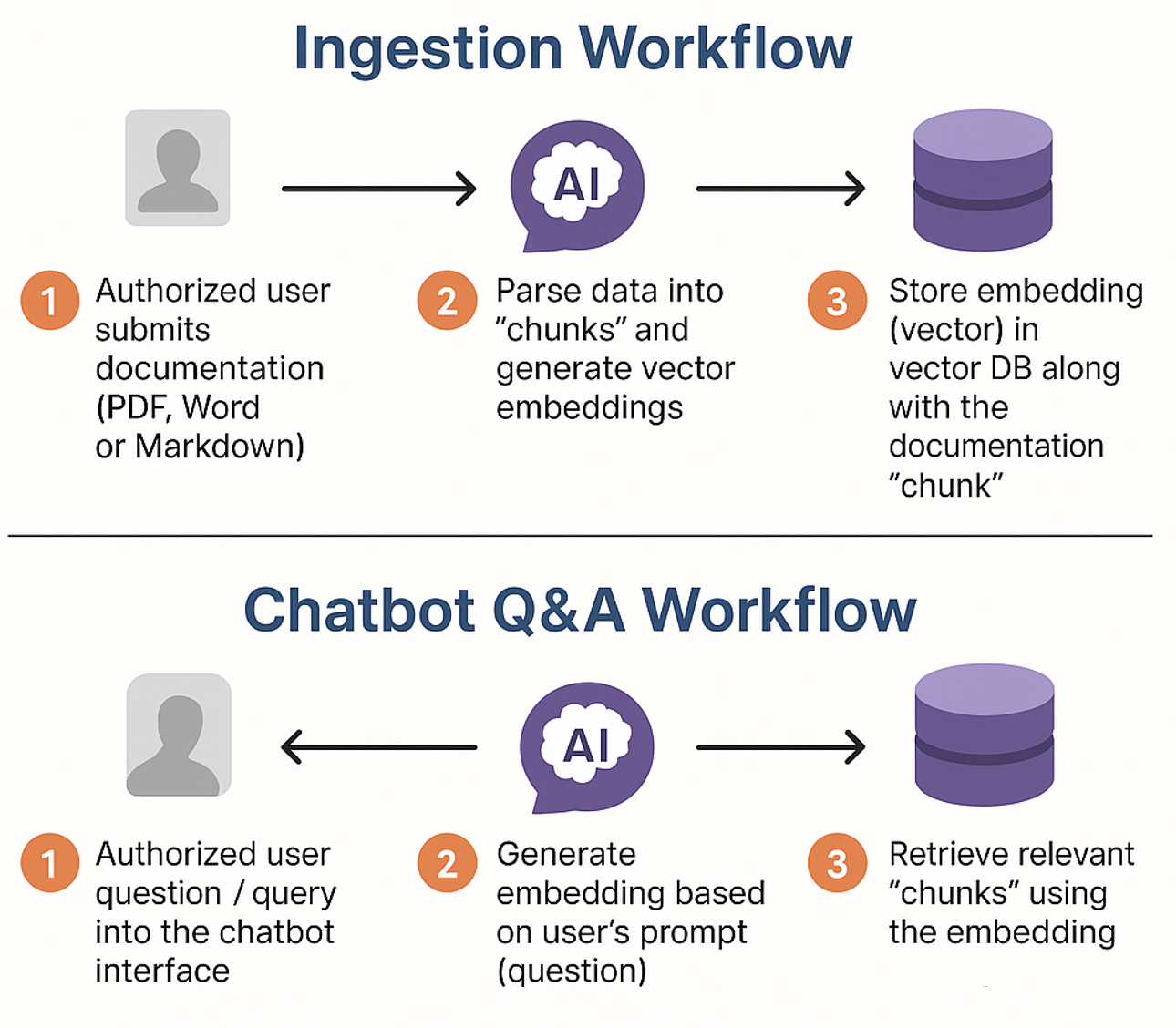

This article explores how to build and integrate such a chatbot, using an architecture designed to keep responses accurate and relevant. As shown in the accompanying diagram, the approach has two main phases: a Documentation Ingestion Pipeline and a Chatbot Q&A Workflow.

Phase 1: The Documentation Ingestion Pipeline – Building the Brain

1. Secure Document Submission: Authorized administrators access a dedicated interface to upload application documentation. This can include various formats like PDFs, Word documents, or Markdown files, ensuring all existing knowledge assets can be utilized.

2. Intelligent Parsing and Embedding Generation:

- The uploaded documents are processed by a backend system (referred to as the "IMP Application"). This application first parses the data, breaking it down into smaller, manageable "chunks" of text. Chunking matters because the AI model later receives only the retrieved chunks as context, so each chunk should be small and focused.

- For each chunk, the system generates Vector Embeddings. These are numerical representations of the text's semantic meaning, created using an AI model. Pieces of text with similar meaning map to vectors that lie close together, which is what makes similarity search possible.

3. Storing Knowledge in a Vector Database:

- The generated vector embeddings, along with their corresponding original text chunks, are then stored in a specialized Vector Database (e.g., Qdrant). Vector databases are optimized for fast similarity searches, allowing the system to find the most relevant information based on semantic meaning, not just keyword matching.
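The parsing step in this phase can be sketched as follows. This is a minimal illustration, not the IMP Application's actual implementation: the function name `chunk_text`, the word-based splitting, and the `max_words` and `overlap` defaults are all assumptions chosen for the example. Overlapping neighbouring chunks is one common way to avoid cutting an explanation in half at a chunk boundary.

```python
def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks that overlap with their neighbours.

    Hypothetical helper: the sizes are illustrative, not recommended values.
    """
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")

    words = text.split()
    step = max_words - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the window already reached the end of the document
    return chunks


# Example: a 120-word document split into windows of 50 words,
# each sharing 10 words with the previous one.
document = " ".join(f"w{i}" for i in range(120))
chunks = chunk_text(document, max_words=50, overlap=10)
```

In practice the split is often done on headings, paragraphs, or sentences rather than raw word counts, so that each chunk stays a self-contained unit of documentation.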

With the documentation processed and stored, the chatbot's knowledge base is established and ready.
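To make the embedding and storage steps concrete, here is a small self-contained sketch. Everything in it is a stand-in: `toy_embed` is a bag-of-words placeholder for a real embedding model (which would return a dense vector of floats), and `InMemoryVectorStore` is a hypothetical substitute for a real vector database. The point is only to show that each stored record keeps the original chunk next to its vector.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


def toy_embed(text: str) -> dict[str, float]:
    """Stand-in for a real embedding model: a unit-length bag-of-words vector."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {token: c / norm for token, c in counts.items()} if norm else {}


@dataclass
class Record:
    chunk: str                   # original text, later passed to the LLM
    embedding: dict[str, float]  # vector used for similarity search
    source: str                  # file the chunk came from


@dataclass
class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database."""
    records: list[Record] = field(default_factory=list)

    def add(self, chunk: str, source: str) -> None:
        self.records.append(Record(chunk, toy_embed(chunk), source))


# Assumed sample chunks and file names, for illustration only.
store = InMemoryVectorStore()
store.add("To reset your password, open Settings and choose Security.", "account.md")
store.add("Invoices can be exported as PDF from the Billing page.", "billing.md")
```

Keeping the source file name alongside each chunk is an optional design choice; it makes it possible to show users where an answer came from.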

Phase 2: The Chatbot Q&A Workflow – Delivering Instant Answers

1. User Interaction: An authorized user within your web application encounters a question or needs assistance. They type their query into the chatbot interface, which is seamlessly integrated into the web page.

2. Query Understanding and Embedding:

- The user's question (prompt) is sent to the IMP Application.

- Just as with the documentation, the system generates a vector embedding for the user's query, using the same AI model that was used during ingestion. This ensures that the query and the stored documentation chunks are represented in the same "semantic space."

3. Retrieving Relevant Context:

- The embedding of the user's query is then used to search the Vector Database, which compares this query embedding against the stored document chunk embeddings.

- It retrieves the "chunks" of documentation that are most semantically similar (i.e., most relevant) to the user's question.

4. Generating and Delivering the Answer:

- The user's original prompt, along with the retrieved relevant document chunks (the "additional context"), is sent to a Large Language Model (LLM) or a similar AI model.

- This model then synthesizes the information – understanding the user's question and using the provided context from your documentation – to generate a coherent, accurate, and helpful answer.

- This answer is then returned to the user through the chatbot interface in real time.
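The retrieval step of this workflow can be sketched as a similarity search over stored chunks. As before, this is an illustration under stated assumptions: `toy_embed` is a bag-of-words placeholder for a real embedding model, the `search` function and its `top_k` parameter are hypothetical names, and a real vector database would typically use an index rather than scoring every chunk in a loop.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> dict[str, float]:
    """Stand-in for a real embedding model: a unit-length bag-of-words vector."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {token: c / norm for token, c in counts.items()} if norm else {}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity; both vectors are unit length, so a dot product suffices."""
    return sum(weight * b.get(token, 0.0) for token, weight in a.items())


def search(query: str, index: list[tuple[str, dict[str, float]]],
           top_k: int = 2) -> list[tuple[float, str]]:
    """Return the top_k (score, chunk) pairs most similar to the query."""
    query_vec = toy_embed(query)  # same embedding function as at ingestion time
    scored = [(cosine(query_vec, vec), chunk) for chunk, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Assumed sample chunks, embedded once at ingestion time.
chunks = [
    "To reset your password, open Settings and choose Security.",
    "Invoices can be exported as PDF from the Billing page.",
    "Invite teammates from the Members tab in Settings.",
]
index = [(chunk, toy_embed(chunk)) for chunk in chunks]

results = search("How do I reset my password?", index, top_k=2)
```

Here the password chunk ranks first because it shares the most terms with the query. With a real embedding model, the ranking would also reflect paraphrases that share no exact words.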

This process is a form of Retrieval-Augmented Generation (RAG): the LLM's generative capabilities are grounded in information retrieved from your specific documentation, which tends to make responses more accurate and trustworthy.
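The grounding happens in how the prompt is assembled before it reaches the model. The sketch below shows one possible way to combine the user's question with the retrieved chunks. The function name `build_prompt` and the instruction wording are assumptions for illustration, and the call to the LLM itself is left out because it depends on the provider you use.

```python
def build_prompt(question: str, retrieved_chunks: list[str]) -> str:
    """Combine the user's question with retrieved documentation excerpts.

    Hypothetical prompt layout; adjust the wording to suit your model.
    """
    # Number the excerpts so the model (and a reviewer) can refer to them.
    context = "\n\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    return (
        "Answer the question using only the documentation excerpts below. "
        "If the excerpts do not contain the answer, say so.\n\n"
        f"Documentation excerpts:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


prompt = build_prompt(
    "How do I reset my password?",
    ["To reset your password, open Settings and choose Security."],
)
```

Telling the model to admit when the excerpts lack an answer is a common way to discourage it from inventing details that are not in your documentation.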

Highlights

- 24/7 support – no more waiting for help.

- Reduce support workload – let the chatbot handle common questions.

- Easy to use and set up – works with your existing documents.

- Helps your team or customers find what they need, fast.

Integrating the Chatbot into Your Web Application

- Simple Snippet: A lightweight JavaScript snippet is added to your web application's codebase.

- Selective Placement: This snippet allows developers to place the chatbot interface on selected views or pages within the application. This means you can strategically offer assistance where users need it most.

- Seamless Interaction: When a user interacts with the chatbot icon or window, the JavaScript handles communication with the backend (the IMP Application) to trigger the Q&A workflow described above.
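The snippet itself is JavaScript, but the exchange it triggers can be illustrated from the backend's side. The sketch below assumes a simple JSON contract, a request body with a `question` field and a response body with an `answer` field. That contract, the function name `handle_chat_request`, and the `answer_fn` callback are all hypothetical; the real IMP Application may use a different shape.

```python
import json
from typing import Callable


def handle_chat_request(raw_body: str,
                        answer_fn: Callable[[str], str]) -> tuple[int, str]:
    """Validate a chatbot request and return (HTTP status, JSON response body).

    answer_fn stands in for the Q&A workflow: embed, retrieve, generate.
    """
    try:
        payload = json.loads(raw_body)
    except json.JSONDecodeError:
        return 400, json.dumps({"error": "body must be valid JSON"})

    question = payload.get("question") if isinstance(payload, dict) else None
    if not isinstance(question, str) or not question.strip():
        return 400, json.dumps({"error": "missing 'question'"})

    return 200, json.dumps({"answer": answer_fn(question.strip())})


# Example exchange, with a placeholder in place of the real workflow.
status, body = handle_chat_request(
    '{"question": " How do I reset my password? "}',
    lambda q: "echo: " + q,
)
```

Keeping the handler independent of any web framework makes the contract easy to test before wiring it to a real endpoint.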

Conclusion

Integrating an intelligent documentation chatbot into your web application, powered by vector embeddings and a retrieval-based Q&A workflow, changes how users interact with your platform. By providing instant, context-aware assistance drawn directly from your own documentation, you can improve user satisfaction, reduce the time users spend searching for answers, and get more value from your application's knowledge base.

How We Help

End-to-end services to build and integrate intelligent documentation chatbots

Our engagement covers every step, from strategy and ingestion pipeline setup to seamless integration and ongoing optimization.

Strategy

- AI opportunity assessment

- Knowledge base audit

- Integration planning

Implementation

- Ingestion pipeline setup

- Vector database configuration

- Custom chatbot UI

Integration

- JavaScript snippet integration

- Contextual placement

- Performance optimization

Enablement

- Admin training

- Analytics & improvement

- Ongoing support